Introduction & Motivation

Techniques including Chain-of-Thought (CoT) Prompting [1,2] and Self-Consistency [3], as well as reasoning-enhanced models, e.g., OpenAI-o1, DeepSeek-R1, and KIMI-k1.5 [4-6], have all contributed to improvements in multi-step reasoning for solving hard problems.

Discussions in the community suggest that advanced reasoning capabilities in LLMs mainly stem from two factors:

- foundational knowledge acquisition through massive pretraining on diverse data;

- strategic refinement via post-training interventions like supervised fine-tuning (SFT) or reinforcement learning (RL).

In recent weeks, much attention has focused on reproducing R1, i.e., improving the reasoning abilities of LLMs of varying scales. However, our investigations reveal a critical gap in this paradigm: diverse models, from the small Llama3.2-3B-Instruct to the state-of-the-art DeepSeek-R1, systematically misinterpret the original problem during the reasoning process. These errors in foundational comprehension, rather than faulty logical sequencing, account for a substantial proportion of incorrect final answers.

Here are two examples:

As shown, Qwen2.5 errs because it misunderstands the fact “restart the download”, and DeepSeek-R1 fails because it assumes a distribution of cubic residues that is not mentioned in the question.

To be more rigorous, we study Qwen2.5-7B-Instruct on GSM8K. We collect its incorrect reasoning outcomes on the GSM8K test set and, with the help of GLM-4-Plus, classify the cause of each error into two categories: “Misinterpretation of the Original Problem” and “Logical Reasoning Errors”. The plot below displays the statistics:

To our surprise, over 30 percent of reasoning errors stem from “Misinterpretation of the Original Problem” in this case. This finding suggests that a failure to reach the correct result is often due not to insufficient reasoning capability but to the model reasoning about the wrong problem.

We refer to this type of failure as “Factual Drift”: LLMs misinterpret, overlook, or hallucinate key contextual information during reasoning and thus make errors despite logically sound steps.

When shifting from small models to cutting-edge ones like DeepSeek-R1, we observe that factual drift is alleviated to some extent, as in the example below:

By dynamically paraphrasing critical constraints and conditions, DeepSeek-R1 implicitly performs error-checking to correct prior misunderstandings of the context. We refer to this phenomenon as Self-Verification, which has also been noted by many other researchers in the community. However, such self-verification operates as a stochastic safeguard rather than a systematic protocol: it is not guaranteed to be triggered in every reasoning scenario. The risk of factual drift therefore remains, and it can be significant, as suggested by the notable performance improvements achieved by our proposed SIFT (see below).

Given these observations, we conclude that

attention should be shifted from reasoning capacities to reasoning fidelity.

That is, it is equally important to care about whether LLMs are reasoning about the correct problem.

The SIFT Framework

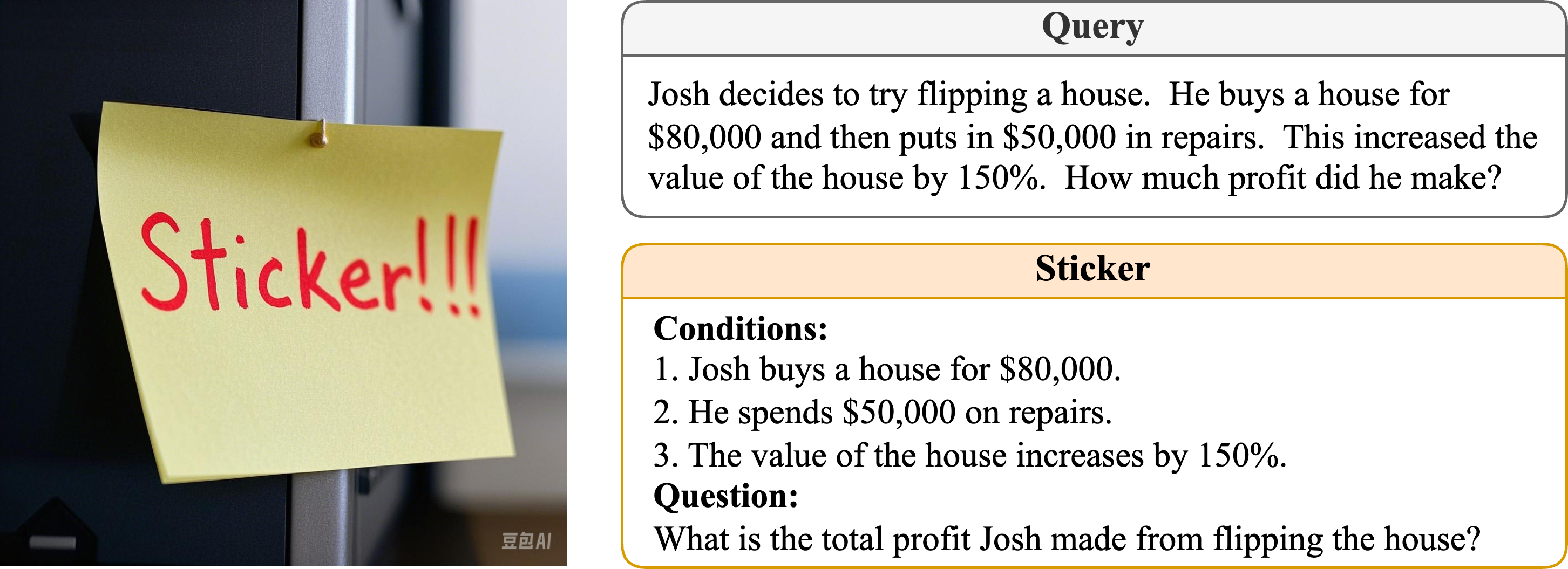

SIFT is a post-training approach that leverages inference-time compute to improve generation quality without relying on reward models, unlike Best-of-N (BoN) and Monte-Carlo tree search (MCTS). Concretely, SIFT lets the target LLM summarize the key facts within the input query, including essential conditions and the core question, into a structured Sticker (see above), and then makes two predictions: one based on the Sticker alone and one based on the query augmented with the Sticker.

If the two predictions are the same, SIFT returns that prediction; otherwise, the Sticker is refined through bidirectional optimization (a forward pass that better aligns the Sticker with the query and an inverse pass that conforms it to the model’s reasoning preference) for more faithful reasoning.
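To make the two inputs described above concrete, here is a minimal sketch of a Sticker and of the two prompts built from it. The `Sticker` class, its field names, the `CONDITIONS`/`QUESTION` layout, and both helper functions are assumptions made for illustration; the source does not specify an exact Sticker format.

```python
from dataclasses import dataclass, field


@dataclass
class Sticker:
    """Key facts extracted from a query (hypothetical layout)."""
    conditions: list[str] = field(default_factory=list)  # essential conditions
    question: str = ""                                    # the core question

    def render(self) -> str:
        """Serialize the Sticker as plain text for use in a prompt."""
        lines = ["CONDITIONS:"]
        lines += [f"{i}. {c}" for i, c in enumerate(self.conditions, start=1)]
        lines += ["QUESTION:", self.question]
        return "\n".join(lines)


def sticker_only_prompt(sticker: Sticker) -> str:
    """Input for the first prediction: the Sticker alone."""
    return sticker.render()


def augmented_prompt(query: str, sticker: Sticker) -> str:
    """Input for the second prediction: the original query plus the Sticker."""
    return f"{query}\n\n{sticker.render()}"
```

Keeping the Sticker as structured data rather than free text makes it easier to inspect which condition changed when the Sticker is later refined.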

The whole algorithmic procedure is shown below:

Namely, there are four core operations in SIFT:

- Sticker Generation (SG): The model extracts key facts (e.g., conditions, core questions) from the query and makes a structured Sticker.

- Consensus Prediction (CP): Predictions are generated from both the Sticker alone and the original query augmented with the Sticker. If they disagree, the Sticker will be refined.

- Forward Optimization (FO): The Sticker is adjusted to better align with the query’s semantics.

- Inverse Generation (IG): A new Sticker is inferred from the model’s prediction to match its reasoning preferences.
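The four operations above can be combined into a control loop along the following lines. This is a sketch under stated assumptions, not the authors' implementation: the callables `predict`, `make_sticker`, `forward_opt`, and `inverse_gen` are hypothetical wrappers around the target LLM, Stickers are treated as plain strings, answers are compared by exact string equality, and returning the augmented prediction when no consensus is reached is an assumed fallback.

```python
from typing import Callable, Optional, Tuple

# All callables are hypothetical wrappers around the target LLM.
Predict = Callable[[str], str]          # prompt -> final answer
MakeSticker = Callable[[str], str]      # SG: query -> Sticker text
ForwardOpt = Callable[[str, str], str]  # FO: (query, Sticker) -> refined Sticker
InverseGen = Callable[[str], str]       # IG: prediction -> new Sticker


def consensus_prediction(
    query: str, sticker: str, predict: Predict
) -> Tuple[Optional[str], str]:
    """CP: return (agreed answer or None, prediction from query + Sticker)."""
    from_sticker = predict(sticker)
    from_augmented = predict(f"{query}\n{sticker}")
    agreed = from_sticker if from_sticker == from_augmented else None
    return agreed, from_augmented


def sift(
    query: str,
    predict: Predict,
    make_sticker: MakeSticker,
    forward_opt: ForwardOpt,
    inverse_gen: InverseGen,
) -> str:
    # Stage 1: generate a Sticker and check for consensus.
    sticker = make_sticker(query)
    agreed, augmented = consensus_prediction(query, sticker, predict)
    if agreed is not None:
        return agreed

    # Stage 2: align the Sticker with the query, then check again.
    sticker = forward_opt(query, sticker)
    agreed, augmented = consensus_prediction(query, sticker, predict)
    if agreed is not None:
        return agreed

    # Stage 3: infer a Sticker from the prediction, re-align it, check again.
    sticker = forward_opt(query, inverse_gen(augmented))
    agreed, augmented = consensus_prediction(query, sticker, predict)

    # Assumed fallback: without consensus, return the augmented prediction.
    return agreed if agreed is not None else augmented
```

In practice the equality check would likely compare extracted final answers rather than raw model output, since two reasoning traces rarely match verbatim.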

These operations are illustrated below:

Key Results

Experiments across models (3B to 100B+ parameters) and benchmarks (GSM8K, MATH-500, AIME2024, AIME2025) demonstrate SIFT’s effectiveness:

- Improves DeepSeek-R1’s accuracy to 85.67% on AIME2024 (vs. 78.33% baseline) and 77.33% on AIME2025 (vs. 69.80% baseline), setting a new SOTA among open-source models.

- Improves Llama3.2-3B-Instruct’s accuracy by 8.80% on MATH-500.

- Shows consistent gains across architectures (dense/MoE) and scales, indicating its versatility.

The three stages in the above results are defined as follows:

- Stage 1: Only SG and CP are used.

- Stage 2: Building upon Stage 1, FO is used to optimize the Sticker.

- Stage 3: The complete process outlined in Algorithm 1.

In addition, compared with self-consistency, another well-known baseline for verifier-free inference-time scaling, SIFT achieves a better accuracy-versus-tokens scaling curve:
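For context, the self-consistency baseline mentioned above samples several independent reasoning paths and returns the most frequent final answer. A minimal sketch follows; the `sample` callable (one stochastic LLM call that returns a final answer) and the default `n` are assumptions for illustration.

```python
from collections import Counter
from typing import Callable


def self_consistency(query: str, sample: Callable[[str], str], n: int = 5) -> str:
    """Majority vote over n independently sampled final answers."""
    answers = [sample(query) for _ in range(n)]
    # most_common(1) returns [(answer, count)] for the most frequent answer.
    return Counter(answers).most_common(1)[0][0]
```

Each of the `n` samples is a full reasoning pass, so token usage grows roughly linearly with `n`; this is the cost axis along which the two methods are compared.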

Why Does SIFT Work?

- Explicit Fact Highlights: Stickers externalize critical information, mimicking human sticky-note practices.

- Bidirectional Alignment: FO anchors Stickers to source semantics, while IG aligns them with the model’s reasoning style.

- Consensus Validation: Disagreements between Sticker-only and augmented predictions trigger refinement, helping to ensure factual fidelity.

- Read better to reason better: SIFT helps models better understand a query, align more accurately with the true intent, and fully leverage their capabilities.

As shown in the figure below, iterative optimization progressively improves prediction alignment, reducing factual drift.

Conclusion

Citation

If you find our paper or codebase useful, please consider citing:

@article{zeng2025sift,

title={SIFT: Grounding LLM Reasoning in Contexts via Stickers},

author={Zeng, Zihao and Huang, Xuyao and Li, Boxiu and Deng, Zhijie},

year={2025},

url={https://github.com/zhijie-group/SIFT/blob/main/paper.pdf}

}

References

[1] Takeshi Kojima, Shixiang Shane Gu, Machel Reid, Yutaka Matsuo, and Yusuke Iwasawa. 2022. Large language models are zero-shot reasoners. Advances in neural information processing systems.

[2] Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Fei Xia, Ed Chi, Quoc V Le, Denny Zhou, et al. 2022. Chain-of-thought prompting elicits reasoning in large language models. Advances in neural information processing systems.

[3] Xuezhi Wang, Jason Wei, Dale Schuurmans, Quoc Le, Ed Chi, Sharan Narang, Aakanksha Chowdhery, and Denny Zhou. 2023. Self-consistency improves chain of thought reasoning in language models. The Eleventh International Conference on Learning Representations.

[4] Aaron Jaech, Adam Kalai, Adam Lerer, Adam Richardson, Ahmed El-Kishky, Aiden Low, Alec Helyar, Aleksander Madry, Alex Beutel, Alex Carney, et al. 2024. OpenAI o1 system card.

[5] Daya Guo, Dejian Yang, Haowei Zhang, Junxiao Song, Ruoyu Zhang, Runxin Xu, Qihao Zhu, Shirong Ma, Peiyi Wang, Xiao Bi, et al. 2025. DeepSeek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning.

[6] Kimi Team, Angang Du, Bofei Gao, Bowei Xing, Changjiu Jiang, Cheng Chen, Cheng Li, Chenjun Xiao, Chenzhuang Du, Chonghua Liao, et al. 2025. Kimi k1.5: Scaling reinforcement learning with LLMs.